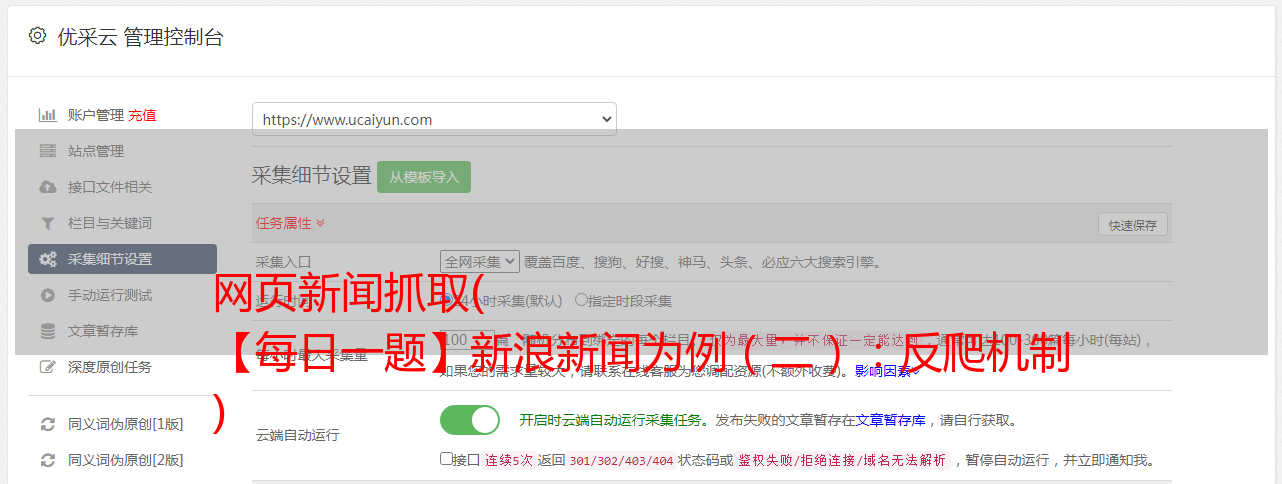

Web News Scraping ([Daily Problem] Sina News as an Example (2): Anti-Scraping Mechanisms)

优采云 Published: 2021-10-10 19:01

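Preface

The text and images in this article come from the internet and are for learning and exchange only. They have no commercial use. Copyright belongs to the original author. If you have any questions, please contact us so we can handle them.

Anti-scraping measures on major websites have become quite aggressive these days, for example the character obfuscation on Dianping (大众点评) and the login verification on Weibo. By comparison, the anti-scraping measures on news sites are somewhat weaker. So today I will take Sina News as an example and walk through how to use a Python crawler to fetch news by keyword.

First, if you search from inside the news channel, you will find that it shows at most 20 pages of results, so we search from the Sina home page instead, where there is no page limit.

Page structure analysis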

    <a href="javascript:;" onclick="getNewsData('https://interface.sina.cn/homepage/search.d.json?t=&q=%E6%97%85%E6%B8%B8&pf=0&ps=0&page=2')" title="第2页">2</a>
    <a href="javascript:;" onclick="getNewsData('https://interface.sina.cn/homepage/search.d.json?t=&q=%E6%97%85%E6%B8%B8&pf=0&ps=0&page=3')" title="第3页">3</a>
    <a href="javascript:;" onclick="getNewsData('https://interface.sina.cn/homepage/search.d.json?t=&q=%E6%97%85%E6%B8%B8&pf=0&ps=0&page=4')" title="第4页">4</a>
    <a href="javascript:;" onclick="getNewsData('https://interface.sina.cn/homepage/search.d.json?t=&q=%E6%97%85%E6%B8%B8&pf=0&ps=0&page=5')" title="第5页">5</a>
    <a href="javascript:;" onclick="getNewsData('https://interface.sina.cn/homepage/search.d.json?t=&q=%E6%97%85%E6%B8%B8&pf=0&ps=0&page=6')" title="第6页">6</a>
    <a href="javascript:;" onclick="getNewsData('https://interface.sina.cn/homepage/search.d.json?t=&q=%E6%97%85%E6%B8%B8&pf=0&ps=0&page=7')" title="第7页">7</a>
    <a href="javascript:;" onclick="getNewsData('https://interface.sina.cn/homepage/search.d.json?t=&q=%E6%97%85%E6%B8%B8&pf=0&ps=0&page=8')" title="第8页">8</a>
    <a href="javascript:;" onclick="getNewsData('https://interface.sina.cn/homepage/search.d.json?t=&q=%E6%97%85%E6%B8%B8&pf=0&ps=0&page=9')" title="第9页">9</a>
    <a href="javascript:;" onclick="getNewsData('https://interface.sina.cn/homepage/search.d.json?t=&q=%E6%97%85%E6%B8%B8&pf=0&ps=0&page=10')" title="第10页">10</a>
    <a href="javascript:;" onclick="getNewsData('https://interface.sina.cn/homepage/search.d.json?t=&q=%E6%97%85%E6%B8%B8&pf=0&ps=0&page=2');" title="下一页">下一页</a>

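After opening Sina and searching for a keyword, I found that the page URL never changes, yet the page content updates. Experience tells me this is done with Ajax, so I pulled down the page's source code to take a look. The pagination links shown above come from that source.

Clearly, every page turn sends a request to an address through a click on an <a> tag. If you paste that address straight into the browser's address bar and press Enter:

Congratulations, I got an error.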
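To see exactly which parameters a pagination link carries, the URL can be pulled out of the onclick attribute and its query string decoded. The following is a minimal sketch using only the standard library; the helper names extract_interface_url and query_params are made up for illustration, and the sample link is one of the pagination anchors shown earlier.

```python
import re
from urllib.parse import urlsplit, parse_qs

# One pagination link, copied from the page markup shown earlier.
link = (
    '<a href="javascript:;" onclick="getNewsData('
    "'https://interface.sina.cn/homepage/search.d.json"
    "?t=&q=%E6%97%85%E6%B8%B8&pf=0&ps=0&page=2'"
    ')" title="第2页">2</a>'
)

def extract_interface_url(html):
    """Return the URL passed to getNewsData(...) in an onclick attribute, or None."""
    match = re.search(r"getNewsData\('([^']+)'\)", html)
    return match.group(1) if match else None

def query_params(url):
    """Decode the query string into a dict, keeping blank values such as t=."""
    parsed = parse_qs(urlsplit(url).query, keep_blank_values=True)
    return {name: values[0] for name, values in parsed.items()}

url = extract_interface_url(link)
params = query_params(url)
print(params)
```

The decoded q value is the search keyword, and page is the only parameter that differs between the links.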

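Looking closely at the onclick attribute in the HTML, you can see that it calls a function named getNewsData. Searching the page's JS files for this function shows that it builds the request URL before every Ajax call, sends a GET request, and expects the response as JSONP (for cross-domain access).

So all we need to do is imitate its request format to get the data.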

    var loopnum = 0;
    function getNewsData(url){
        var oldurl = url;
        // key (the search keyword), getStartDay and getEndDay are not defined in this excerpt
        if(!key){
            $("#result").html("无搜索热词"); // "no search keyword"
            return false;
        }
        if(!url){
            url = 'https://interface.sina.cn/homepage/search.d.json?q='+encodeURIComponent(key);
        }
        var stime = getStartDay();
        var etime = getEndDay();
        url +='&stime='+stime+'&etime='+etime+'&sort=rel&highlight=1&num=10&ie=utf-8'; //'&from=sina_index_hot_words&sort=time&highlight=1&num=10&ie=utf-8';
        $.ajax({
            type: 'GET',
            dataType: 'jsonp',
            cache : false,
            url:url,
            success: function(){} // the real callback is too long, so it is omitted here
        })
    }
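The URL that getNewsData ends up requesting can be reproduced in Python before any request is sent. This is a rough sketch with the standard library: build_search_url is a hypothetical helper, the t, pf and ps parameters are taken from the pagination links, and the date strings are assumed to use the same YYYY-MM-DD form as the Python example in this article, since getStartDay and getEndDay are not shown.

```python
from urllib.parse import urlencode

BASE_URL = "https://interface.sina.cn/homepage/search.d.json"

def build_search_url(keyword, page, stime, etime):
    """Build a search URL with the parameters that getNewsData appends."""
    params = {
        "t": "",
        "q": keyword,          # urlencode percent-encodes this as UTF-8
        "pf": "0",
        "ps": "0",
        "page": str(page),
        "stime": stime,        # assumed format: YYYY-MM-DD
        "etime": etime,
        "sort": "rel",
        "highlight": "1",
        "num": "10",
        "ie": "utf-8",
    }
    return BASE_URL + "?" + urlencode(params)

url = build_search_url("旅游", 2, "2019-03-30", "2020-03-31")
print(url)
```

For this keyword, urlencode produces the same percent-encoding as encodeURIComponent in the JS; the two can differ for other inputs, for example in how spaces are encoded.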

Sending the request

    import requests

    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:74.0) Gecko/20100101 Firefox/74.0",
    }

    # Same query parameters that the page's getNewsData function sends
    params = {
        "t": "",
        "q": "旅游",  # search keyword ("travel")
        "pf": "0",
        "ps": "0",
        "page": "1",
        "stime": "2019-03-30",
        "etime": "2020-03-31",
        "sort": "rel",
        "highlight": "1",
        "num": "10",
        "ie": "utf-8"
    }

    response = requests.get("https://interface.sina.cn/homepage/search.d.json", params=params, headers=headers)
    print(response)

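This time I used the requests library, built the same URL, and sent the request. What came back was a cold 403 Forbidden:

So I went back to the site to see what had gone wrong.

In the developer tools, find the returned JSON file and inspect its request headers. They include a Cookie, so when building our own headers we can simply copy the browser's request headers. Run it again and the response is 200! The rest is simple: parse the returned data and write it to Excel.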
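The parsing step can be sketched separately from the network call. The page's own JS asks for JSONP, so a response body may arrive wrapped in a callback; the helper below is a minimal sketch that accepts either plain JSON or a JSONP wrapper. The names parse_payload and extract_rows and the sample body are hypothetical; only the key names (result, list, origin_title, datetime, media, url) come from this article's code.

```python
import json
import re

def parse_payload(text):
    """Parse a response body that is either plain JSON or JSONP-wrapped JSON."""
    text = text.strip()
    # JSONP looks like callbackName({...}); keep only what is inside the parentheses.
    match = re.fullmatch(r"[\w$.]+\((.*)\)\s*;?", text, flags=re.DOTALL)
    if match:
        text = match.group(1)
    return json.loads(text)

def extract_rows(payload):
    """Pull out the four fields that are later written to Excel."""
    items = payload.get("result", {}).get("list", [])
    return [
        (item.get("origin_title"), item.get("datetime"), item.get("media"), item.get("url"))
        for item in items
    ]

# Hypothetical sample body, made up for illustration.
sample = (
    'jQuery123({"result": {"list": [{"origin_title": "Demo", '
    '"datetime": "2020-03-30 10:00:00", "media": "Example Media", '
    '"url": "https://example.com/a"}]}});'
)
rows = extract_rows(parse_payload(sample))
print(rows)
```

Using .get with defaults means a response without a result list yields an empty list instead of raising KeyError.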

Full code

    import requests
    import json
    import xlwt

    def getData(page, news):
        # Request headers copied from the browser, including the Cookie
        headers = {
            "Host": "interface.sina.cn",
            "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:74.0) Gecko/20100101 Firefox/74.0",
            "Accept": "*/*",
            "Accept-Language": "zh-CN,zh;q=0.8,zh-TW;q=0.7,zh-HK;q=0.5,en-US;q=0.3,en;q=0.2",
            "Accept-Encoding": "gzip, deflate, br",
            "Connection": "keep-alive",
            "Referer": r"http://www.sina.com.cn/mid/search.shtml?range=all&c=news&q=%E6%97%85%E6%B8%B8&from=home&ie=utf-8",
            "Cookie": "ustat=__172.16.93.31_1580710312_0.68442000; genTime=1580710312; vt=99; Apache=9855012519393.69.1585552043971; SINAGLOBAL=9855012519393.69.1585552043971; ULV=1585552043972:1:1:1:9855012519393.69.1585552043971:; historyRecord={'href':'https://news.sina.cn/','refer':'https://sina.cn/'}; SMART=0; dfz_loc=gd-default",
            "TE": "Trailers"
        }
        params = {
            "t": "",
            "q": "旅游",  # search keyword ("travel")
            "pf": "0",
            "ps": "0",
            "page": page,
            "stime": "2019-03-30",
            "etime": "2020-03-31",
            "sort": "rel",
            "highlight": "1",
            "num": "10",
            "ie": "utf-8"
        }
        response = requests.get("https://interface.sina.cn/homepage/search.d.json", params=params, headers=headers)
        dic = json.loads(response.text)
        # Append this page's items to the accumulated list
        news += dic["result"]["list"]
        return news

    def writeData(news):
        workbook = xlwt.Workbook(encoding = 'utf-8')
        worksheet = workbook.add_sheet('MySheet')
        # Header row
        worksheet.write(0, 0, "Title")
        worksheet.write(0, 1, "Time")
        worksheet.write(0, 2, "Media")
        worksheet.write(0, 3, "URL")
        # One row per news item, starting below the header
        for i in range(len(news)):
            print(news[i])
            worksheet.write(i+1, 0, news[i]["origin_title"])
            worksheet.write(i+1, 1, news[i]["datetime"])
            worksheet.write(i+1, 2, news[i]["media"])
            worksheet.write(i+1, 3, news[i]["url"])
        workbook.save('data.xls')

    def main():
        news = []
        # Fetch pages 1 through 500, then write everything at once
        for i in range(1,501):
            news = getData(i, news)
        writeData(news)

    if __name__ == '__main__':
        main()
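The main function above always requests 500 pages. A keyword with fewer results could make later requests pointless, so one possible variation is a loop that stops at the first empty page and optionally pauses between requests. This is only a sketch: collect_news and its fetch_page argument are hypothetical, and fetch_page stands in for a function that performs the request shown above and returns that page's list of items. How the interface actually responds past the last page is an assumption here.

```python
import time

def collect_news(fetch_page, max_pages=500, delay=0.0):
    """Call fetch_page(1), fetch_page(2), ... and stop at the first empty page."""
    news = []
    for page in range(1, max_pages + 1):
        items = fetch_page(page)
        if not items:
            break  # assumed signal that there are no more results
        news.extend(items)
        if delay:
            time.sleep(delay)  # pause between requests
    return news

# Stand-in fetcher for illustration: three pages of two fake items, then nothing.
def fake_fetch(page):
    if page > 3:
        return []
    return [{"origin_title": f"item {page}-{n}"} for n in (1, 2)]

news = collect_news(fake_fetch)
print(len(news))
```

Passing the fetcher in as an argument keeps the paging logic testable without touching the network.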

Final result