Visit Wei Shidong's technical column for crawler architecture / crawler reverse engineering / storage engines / message

优采云 · Published: 2021-05-17 01:28

Visit Wei Shidong's technical column for articles on crawler architecture, crawler reverse engineering, storage engines, message queues, Python, and Golang.

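This article shows how to configure Prometheus so that it can scrape metrics from a specific Web API endpoint. The example used is NGINX: scraping data from an NGINX metrics page that is protected by a username and password. The article could just as well be titled "Prometheus scrape configuration for NGINX" or "Prometheus scraping with basic authentication".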

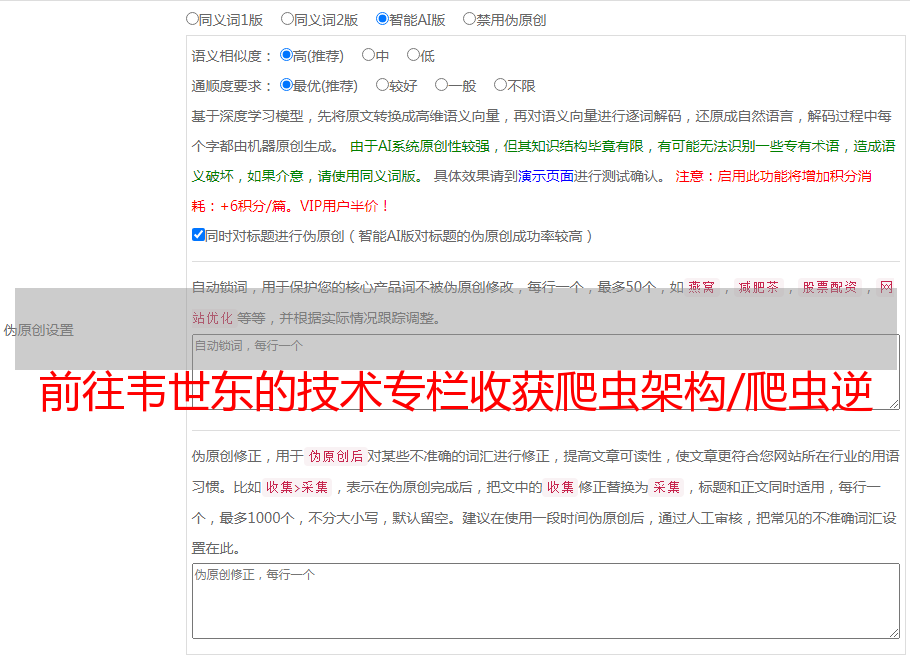

The figure above shows the result in Grafana, using a dashboard template, once the configuration is complete.

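Anyone who has used Prometheus will know how to configure an address:port target. For example, to scrape metrics for a Redis instance, the configuration can be written like this: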

    - job_name: 'redis'
      static_configs:
        - targets: ['11.22.33.58:6087']

Note: this example assumes the Redis Exporter is listening at 11.22.33.58:6087.
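To make the implicit defaults visible, the same job can be written with the scheme and path spelled out. This is only a sketch: `scrape_configs` is the top-level key that scrape jobs live under in `prometheus.yml`, and the `scheme` and `metrics_path` values shown are simply the documented defaults, so with this job Prometheus requests `http://11.22.33.58:6087/metrics`.

```yaml
scrape_configs:
  - job_name: 'redis'
    # Documented defaults, written out explicitly for illustration.
    scheme: http
    metrics_path: /metrics
    static_configs:
      # Each target is host:port only; the scheme and path come from the fields above.
      - targets: ['11.22.33.58:6087']
```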

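This is the simplest and best-known approach. However, if you want to monitor a specific Web API endpoint, you cannot write the configuration this way. Had you not come across this article, you would probably have turned to a search engine.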

Unfortunately, I could not find any useful information (as of March 2021); nearly everything I found led straight into pitfalls.

Assumptions

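Suppose we need to scrape Prometheus metrics from an endpoint at a given address, and that the endpoint is protected by HTTP basic authentication (assume the username is weishidong and the password is 0099887kk).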

Configuration in practice

If you follow the Prometheus configuration shown earlier, you will most likely write something like this:

    - job_name: 'web'
      static_configs:
        - targets: ['http://www.weishidong.com/status/format/prometheus']
      basic_auth:
        username: weishidong
        password: 0099887kk

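After saving the configuration file and restarting the service, you will find that no data can be scraped this way, which is frustrating.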

The official configuration guide

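That attempt clearly did not work. When we hit a problem we cannot figure out, the natural next step is the official documentation -> Prometheus Configuration. Reading it from top to bottom is recommended, but if you are in a hurry you can jump straight to the relevant section. The official example is as follows (it is long, so only the parts relevant to this article are shown here; reading the original is recommended):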

    # The job name assigned to scraped metrics by default.
    job_name: <job_name>

    # How frequently to scrape targets from this job.
    [ scrape_interval: <duration> | default = <global_config.scrape_interval> ]

    # Per-scrape timeout when scraping this job.
    [ scrape_timeout: <duration> | default = <global_config.scrape_timeout> ]

    # The HTTP resource path on which to fetch metrics from targets.
    [ metrics_path: <path> | default = /metrics ]

    # honor_labels controls how Prometheus handles conflicts between labels that are
    # already present in scraped data and labels that Prometheus would attach
    # server-side ("job" and "instance" labels, manually configured target
    # labels, and labels generated by service discovery implementations).
    #
    # If honor_labels is set to "true", label conflicts are resolved by keeping label
    # values from the scraped data and ignoring the conflicting server-side labels.
    #
    # If honor_labels is set to "false", label conflicts are resolved by renaming
    # conflicting labels in the scraped data to "exported_<original-label>" (for
    # example "exported_instance", "exported_job") and then attaching server-side
    # labels.
    #
    # Setting honor_labels to "true" is useful for use cases such as federation and
    # scraping the Pushgateway, where all labels specified in the target should be
    # preserved.
    #
    # Note that any globally configured "external_labels" are unaffected by this
    # setting. In communication with external systems, they are always applied only
    # when a time series does not have a given label yet and are ignored otherwise.
    [ honor_labels: <boolean> | default = false ]

    # honor_timestamps controls whether Prometheus respects the timestamps present
    # in scraped data.
    #
    # If honor_timestamps is set to "true", the timestamps of the metrics exposed
    # by the target will be used.
    #
    # If honor_timestamps is set to "false", the timestamps of the metrics exposed
    # by the target will be ignored.
    [ honor_timestamps: <boolean> | default = true ]

    # Configures the protocol scheme used for requests.
    [ scheme: <scheme> | default = http ]

    # Optional HTTP URL parameters.
    params:
      [ <string>: [<string>, ...] ]

    # Sets the `Authorization` header on every scrape request with the
    # configured username and password.
    # password and password_file are mutually exclusive.
    basic_auth:
      [ username: <string> ]
      [ password: <secret> ]
      [ password_file: <string> ]

    # Sets the `Authorization` header on every scrape request with
    # the configured bearer token. It is mutually exclusive with `bearer_token_file`.
    [ bearer_token: <secret> ]

    # Sets the `Authorization` header on every scrape request with the bearer token
    # read from the configured file. It is mutually exclusive with `bearer_token`.
    [ bearer_token_file: <filename> ]

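<p>If you read carefully, you should notice two key fields: metrics_path and basic_auth. metrics_path specifies the HTTP path from which metrics are fetched when scraping over HTTP, and it defaults to /metrics. basic_auth handles authentication; instead of writing the password in plain text, you can point it at a password file, which is generally safer than keeping the password in the configuration itself.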
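Putting those two fields to work, the earlier 'web' job could be rewritten roughly as follows. This is a sketch built only from the documented fields quoted above, not a tested configuration: it assumes the status page is served over plain HTTP on the default port, and the password file path in the comment is a hypothetical example.

```yaml
scrape_configs:
  - job_name: 'web'
    scheme: http
    # The path part of the URL goes here instead of into targets.
    metrics_path: /status/format/prometheus
    static_configs:
      # Host (and optionally port) only: no scheme and no path.
      - targets: ['www.weishidong.com']
    basic_auth:
      username: weishidong
      password: 0099887kk
      # Alternative to the password line above (the two are mutually exclusive).
      # The file path is a hypothetical example:
      # password_file: /etc/prometheus/nginx_password
```

Splitting the URL this way mirrors how the documented fields divide the request: `scheme` supplies the protocol, the target supplies the host, and `metrics_path` supplies the path.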