Toutiao Article Scraper (Let's Scrape Toutiao Together: Crawling Toutiao's Trending News!)

Published by 优采云 on 2022-03-07 06:02

Alright, let's scrape Toutiao's trending news!

Toutiao's address: https://www.toutiao.com

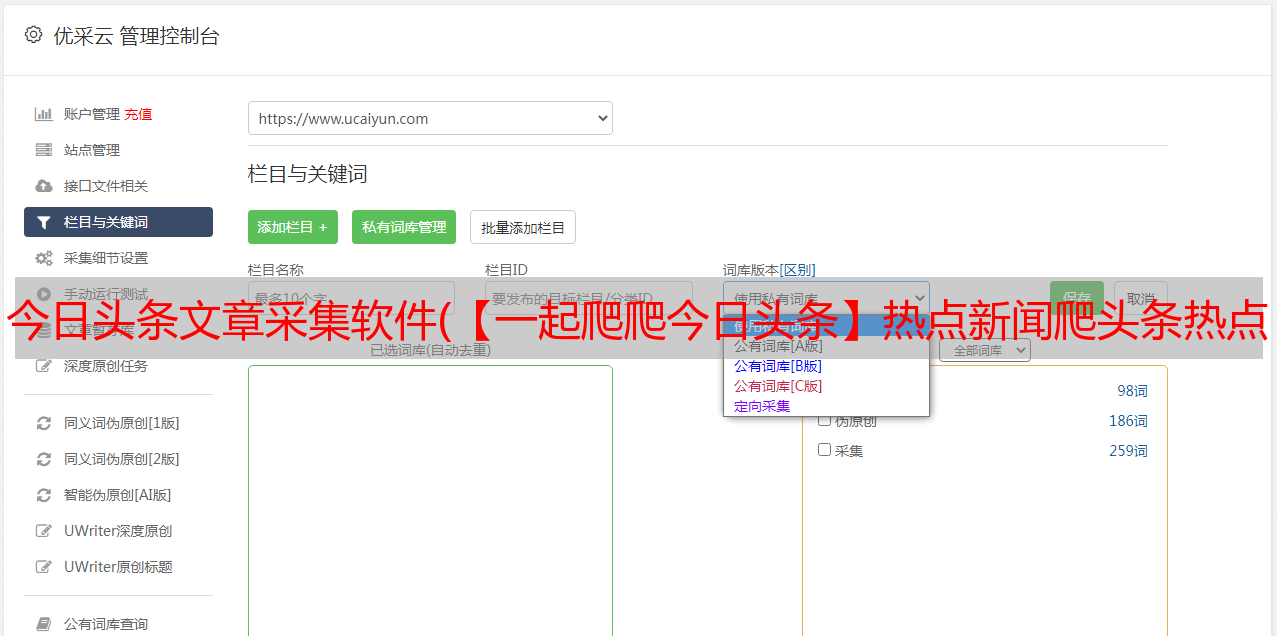

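Open Toutiao in a browser and click 热点 (Trending) in the left-hand menu. With the browser's developer tools open, you can quickly find a request under the Network tab whose URL contains `?category=news_hot...`. Click it to see its request URL, as shown in the figure below: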

All the data returned by this request is stored in the `data` field, in JSON format, as shown in the figure below.
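To make the response shape concrete, here is a minimal sketch that parses a response body and reads the list under `data`, using the same four fields the full script later extracts (`title`, `source_url`, `source`, `media_url`). The sample JSON is a made-up, heavily trimmed example, not a real API response; real items carry many more fields, and some entries may lack some of these keys.

```python
import json

# Hypothetical, trimmed response body (made-up values); only the keys
# used later in this article are shown.
SAMPLE_BODY = """
{
  "data": [
    {"title": "Example headline", "source_url": "/group/123/",
     "source": "Example Media", "media_url": "/c/user/456/"}
  ],
  "next": {"max_behot_time": 1577347347}
}
"""


def extract_items(body: str) -> list[dict]:
    """Parse the JSON text and return the list stored under "data"."""
    payload = json.loads(body)
    # Fall back to an empty list if "data" is absent (e.g. an error reply).
    return payload.get("data", [])


items = extract_items(SAMPLE_BODY)
for item in items:
    print(item["title"], item["source_url"])
```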

The request URL is:

```text
https://www.toutiao.com/api/pc/feed/?category=news_hot&utm_source=toutiao&widen=1&max_behot_time=1577347347&max_behot_time_tmp=1577347347&tadrequire=true&as=A1450EF0A468003&cp=5E04F850E003EE1&_signature=VYMs9gAgEBe5v1fEUcnQ31WDLeAAAuI
```

The URL carries 9 parameters in total, compared in the table below:
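The nine parameters can also be listed programmatically by splitting the captured URL with the standard library's `urllib.parse`. This is only a convenience for inspecting the query string; the URL is the sample one shown above.

```python
from urllib.parse import parse_qs, urlsplit

# The sample request URL captured in the browser (copied from above).
URL = ("https://www.toutiao.com/api/pc/feed/?category=news_hot&utm_source=toutiao"
       "&widen=1&max_behot_time=1577347347&max_behot_time_tmp=1577347347"
       "&tadrequire=true&as=A1450EF0A468003&cp=5E04F850E003EE1"
       "&_signature=VYMs9gAgEBe5v1fEUcnQ31WDLeAAAuI")

# parse_qs maps each parameter name to a list of values; keep the first one.
params = {name: values[0] for name, values in parse_qs(urlsplit(URL).query).items()}

for name, value in params.items():
    print(f"{name:20} {value}")
```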

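The value of `max_behot_time` is taken from the JSON data returned by the previous request (its `next.max_behot_time` field; the first request uses `0`), as shown in the screenshot below: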
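The cursor chaining can be sketched as a loop that feeds each response's `next.max_behot_time` into the next request. `fetch_page` and `fake_fetch` are hypothetical stand-ins for the real HTTP call (the fake one fabricates pages so the loop runs offline); the only details carried over from this article are the starting value `0` and the `next.max_behot_time` location of the cursor.

```python
from typing import Callable


def crawl(fetch_page: Callable[[str], dict], pages: int = 3) -> list[dict]:
    """Request `pages` pages, feeding each response's cursor into the next request."""
    cursor = "0"  # the first request starts from max_behot_time=0
    items: list[dict] = []
    for _ in range(pages):
        res = fetch_page(cursor)
        items.extend(res.get("data", []))
        nxt = res.get("next", {}).get("max_behot_time")
        if nxt is None:  # no cursor returned: stop paginating
            break
        cursor = str(nxt)
    return items


# Hypothetical offline stand-in for the HTTP request: it records the cursor
# it was called with and returns one fake item plus a made-up next cursor.
seen: list[str] = []


def fake_fetch(cursor: str) -> dict:
    seen.append(cursor)
    return {"data": [{"title": f"item@{cursor}"}],
            "next": {"max_behot_time": int(cursor) + 100}}


print(len(crawl(fake_fetch)), seen)
```

Stopping when the cursor is missing avoids a `KeyError` on an unexpected or error response; whether the real API ever omits `next` is not established here.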

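The request URL also contains two parameters, `as` and `cp`, which are generated by the page's JavaScript. The algorithm that produces them can, however, be read from that script:

The algorithm: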

```javascript
// Excerpt from Toutiao's page script (a fragment, not runnable on its own)
var e = {};
e.getHoney = function() {
    var t = Math.floor((new Date).getTime() / 1e3),  // current Unix time in seconds
        e = t.toString(16).toUpperCase(),            // timestamp as uppercase hex
        n = md5(t).toString().toUpperCase();         // uppercase MD5 of the timestamp
    if (8 != e.length) return {                      // fallback constants
        as: "479BB4B7254C150",
        cp: "7E0AC8874BB0985"
    };
    // as: interleave the first 5 MD5 chars with hex chars 0-4
    for (var o = n.slice(0, 5), i = n.slice(-5), a = "", r = 0; 5 > r; r++) a += o[r] + e[r];
    // cp: interleave hex chars 3-7 with the last 5 MD5 chars
    for (var l = "", s = 0; 5 > s; s++) l += e[s + 3] + i[s];
    return {
        as: "A1" + a + e.slice(-3),
        cp: e.slice(0, 3) + l + "E1"
    }
}, t.ascp = e
}(window, document), function() {
    var t = ascp.getHoney(),
        e = {
            path: "/",
            domain: "i.snssdk.com"
        };
    $.cookie("cp", t.cp, e), $.cookie("as", t.as, e), window._honey = t
}(), Flow.prototype = {
    init: function() {
        var t = this;
        this.url && (t.showState(t.auto_load ? NETWORKTIPS.LOADING : NETWORKTIPS.HASMORE), this.container.on("scrollBottom", function() {
            t.auto_load && (t.lock || t.has_more && t.loadmore())
        }), this.list_bottom.on("click", "a", function() {
            return t.lock = !1, t.loadmore(), !1
        }))
    },
    loadmore: function(t) {
        this.getData(this.url, this.type, this.param, t)
    },
    // (remainder of the script omitted)
```
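Running the algorithm in reverse is a quick way to check that the interleaving is understood: `as` mixes the first five MD5 characters with hex-timestamp characters 0 to 4 and ends with characters 5 to 7, while `cp` starts with characters 0 to 2 and mixes characters 3 to 7 with the last five MD5 characters. The sketch below (the helper name is made up for illustration) pulls the hex timestamp back out of the `as`/`cp` pair in the sample URL above, assuming that pair was produced by this algorithm.

```python
def hex_time_from_as_cp(as_: str, cp: str) -> str:
    """Recover the 8-char uppercase hex timestamp embedded in an as/cp pair."""
    body = as_[2:12]           # drop the "A1" prefix: 10 interleaved characters
    e = body[1::2] + as_[-3:]  # odd positions hold hex chars 0-4; as ends with chars 5-7
    # Cross-check against cp: it starts with hex chars 0-2, and chars 3-7 sit at
    # every other position of its middle section.
    if cp[:3] != e[:3] or cp[3:13:2] != e[3:8]:
        raise ValueError("as and cp do not encode the same timestamp")
    return e


e = hex_time_from_as_cp("A1450EF0A468003", "5E04F850E003EE1")
print(e, int(e, 16))  # → 5E048003 1577353219
```

The recovered value is a plausible Unix timestamp, which is consistent with `as` and `cp` being derived from the request time rather than from `max_behot_time`.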

Python code to compute the `as` and `cp` values:

Reference blog:

```python
import time
import hashlib


def get_as_cp_args():
    now = int(time.time())  # current Unix time in seconds (truncated, like the JS Math.floor)
    print(now)
    e = hex(now).upper()[2:]  # hex() gives "0x..."; upper-case it and drop the "0X" prefix
    print(e)
    # Uppercase hex MD5 digest of the decimal timestamp string
    i = hashlib.md5(str(now).encode("utf8")).hexdigest().upper()
    if len(e) != 8:
        # Fallback constants hard-coded in the site's JS
        zz = {'as': "479BB4B7254C150", 'cp': "7E0AC8874BB0985"}
        return zz
    n = i[:5]   # first five MD5 characters
    a = i[-5:]  # last five MD5 characters
    s = ""
    r = ""
    for k in range(5):
        s = s + n[k] + e[k]      # interleave MD5 head with hex chars 0-4
    for k in range(5):
        r = r + e[k + 3] + a[k]  # interleave hex chars 3-7 with MD5 tail
    zz = {
        'as': "A1" + s + e[-3:],
        'cp': e[0:3] + r + "E1"
    }
    print(zz)
    return zz
```
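Because a function that reads the clock is awkward to test, here is a sketch of the same computation taking the timestamp as an argument (`as_cp_from_timestamp` is a hypothetical name, not from the original). It truncates the current time with `int()`, mirroring the JS `Math.floor`, and uses `format(ts, "X")` for the uppercase hex string.

```python
import hashlib
import time
from typing import Optional

# Fallback constants from the site's JS, returned when the hex timestamp is not 8 chars.
FALLBACK = {"as": "479BB4B7254C150", "cp": "7E0AC8874BB0985"}


def as_cp_from_timestamp(ts: Optional[int] = None) -> dict:
    """Compute as/cp for Unix time `ts` (defaults to the current time)."""
    if ts is None:
        ts = int(time.time())
    e = format(ts, "X")  # uppercase hex, no "0x" prefix
    if len(e) != 8:
        return dict(FALLBACK)
    digest = hashlib.md5(str(ts).encode("utf-8")).hexdigest().upper()
    head, tail = digest[:5], digest[-5:]
    a = "".join(head[k] + e[k] for k in range(5))      # MD5 head / hex chars 0-4
    c = "".join(e[k + 3] + tail[k] for k in range(5))  # hex chars 3-7 / MD5 tail
    return {"as": "A1" + a + e[-3:], "cp": e[:3] + c + "E1"}


print(as_cp_from_timestamp(1577353219))
```

Passing a fixed timestamp makes the output reproducible, so the result can be compared against `as`/`cp` values captured in the browser for the same second.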

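With `as` and `cp` computed, the complete request URL can be assembled. One more point: at the time of writing, the JSON data could still be retrieved with the `_signature` parameter removed, so `_signature` can simply be left out.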
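Instead of concatenating strings, the URL can be built from a dictionary with `urllib.parse.urlencode`, which also takes care of escaping. This is a sketch: `build_feed_url` is a hypothetical helper, and the parameter set is the one from the captured URL minus `_signature`. Passing the same dictionary as `requests.get(BASE, params=params)` would have the same effect.

```python
from urllib.parse import urlencode

BASE = "https://www.toutiao.com/api/pc/feed/"


def build_feed_url(max_behot_time: str, as_: str, cp: str) -> str:
    """Assemble the feed URL from its parts, leaving out _signature."""
    params = {
        "category": "news_hot",
        "utm_source": "toutiao",
        "widen": 1,
        "max_behot_time": max_behot_time,
        "max_behot_time_tmp": max_behot_time,  # same value as max_behot_time
        "tadrequire": "true",
        "as": as_,
        "cp": cp,
    }
    return BASE + "?" + urlencode(params)


print(build_feed_url("0", "479BB4B7254C150", "7E0AC8874BB0985"))
```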

The complete code:

```python
import time
import hashlib

import requests
import xlwt


# Compute the as and cp parameters from the current time
def get_as_cp_args():
    now = int(time.time())  # current Unix time in seconds (truncated, like the JS Math.floor)
    e = hex(now).upper()[2:]  # timestamp as uppercase hex without the "0X" prefix
    # Uppercase hex MD5 digest of the decimal timestamp string
    i = hashlib.md5(str(now).encode("utf8")).hexdigest().upper()
    if len(e) != 8:
        # Fallback constants hard-coded in the site's JS
        return {'as': "479BB4B7254C150", 'cp': "7E0AC8874BB0985"}
    n = i[:5]   # first five MD5 characters
    a = i[-5:]  # last five MD5 characters
    s = ""
    r = ""
    for k in range(5):
        s += n[k] + e[k]
    for k in range(5):
        r += e[k + 3] + a[k]
    return {'as': "A1" + s + e[-3:], 'cp': e[0:3] + r + "E1"}


# Fetch a URL and return the parsed JSON
def get_html_data(target_url):
    # Replace these with your own request headers (copied from your browser's
    # developer tools). Copying the code as-is will throw an error!!!
    headers = {"referer": "https://www.toutiao.com/",
               "accept": "text/javascript, text/html, application/xml, text/xml, */*",
               "content-type": "application/x-www-form-urlencoded",
               "cookie": "tt_webid=6774555886024279565; s_v_web_id=76cec5f9a5c4ee50215b678a6f53dea5; WEATHER24279565; csrftoken=bb8c835711d848db5dc5445604d0a9e9; __tasessionId=gphokc0el1577327623076",
               "user-agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36"}
    response = requests.get(target_url, headers=headers, timeout=10)
    response.raise_for_status()  # fail loudly on HTTP errors instead of parsing an error page
    return response.json()


# Parse the responses and extract the relevant fields
def get_parse_data(max_behot_time, base_url, start_url):
    # All Toutiao news records collected so far
    excel_data = []

    # Number of iterations, i.e. how many times the feed is refreshed. A refresh
    # normally returns 10 items but sometimes fewer, so the final count is not
    # necessarily a multiple of 10.
    for _ in range(3):
        # Compute fresh as and cp parameters for this request
        as_cp_args = get_as_cp_args()
        # Assemble the request URL
        target_url = (start_url + max_behot_time
                      + '&max_behot_time_tmp=' + max_behot_time
                      + '&tadrequire=true&as=' + as_cp_args['as']
                      + '&cp=' + as_cp_args['cp'])
        res_data = get_html_data(target_url)
        time.sleep(1)  # pause between requests
        for item in res_data.get('data', []):
            title = item.get('title', '')            # news headline
            source_url = item.get('source_url', '')  # news link
            if not source_url.startswith('http'):
                source_url = base_url + source_url   # relative path -> absolute URL
            source = item.get('source', '')          # Toutiao account that published it
            media_url = base_url + item.get('media_url', '')  # link to that account
            record = [title, source_url, source, media_url]
            excel_data.append(record)
            print(record)
        # The max_behot_time value for the next request comes from next.max_behot_time
        next_info = res_data.get('next') or {}
        if 'max_behot_time' not in next_info:
            break
        max_behot_time = str(next_info['max_behot_time'])

    return excel_data


# Save the data to an Excel spreadsheet
def save_data(excel_data):
    # Column headers: news title, news link, Toutiao account, account link
    header = ["新闻标题", "新闻链接", "头条号", "头条号链接"]
    rows = [header] + excel_data

    workbook = xlwt.Workbook(encoding="utf-8", style_compression=0)
    worksheet = workbook.add_sheet("sheet1", cell_overwrite_ok=True)
    for i, row in enumerate(rows):
        for j, value in enumerate(row):
            worksheet.write(i, j, value)

    workbook.save("今日头条热点新闻.xls")  # "Toutiao trending news.xls"
    print("Toutiao news saved!!")


if __name__ == '__main__':
    # Initial cursor: the first request uses max_behot_time=0
    max_behot_time = '0'
    # Base address
    base_url = 'https://www.toutiao.com'
    # First part of the request URL
    start_url = 'https://www.toutiao.com/api/pc/feed/?category=news_hot&utm_source=toutiao&widen=1&max_behot_time='
    toutiao_data = get_parse_data(max_behot_time, base_url, start_url)
    save_data(toutiao_data)
```

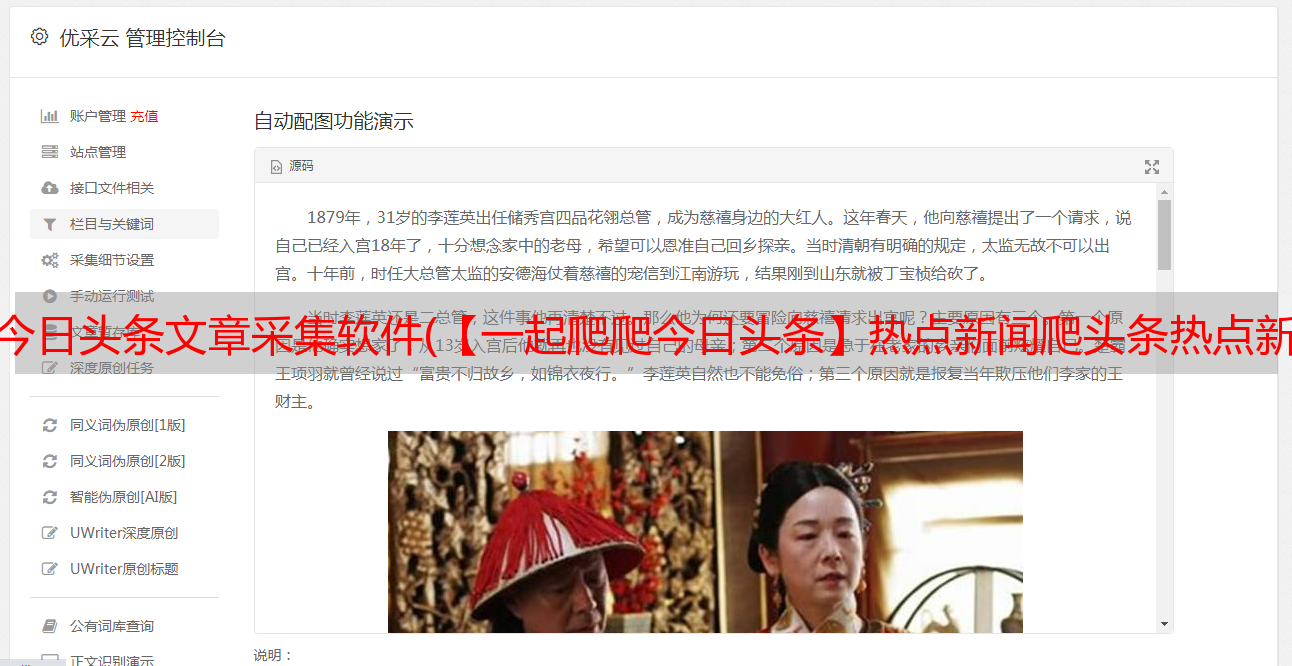

Screenshot of the Excel spreadsheet produced by the program: