Article collection complete (key points: requests, pprint; development environment; part 1)

优采云 Published: 2021-12-06 22:12

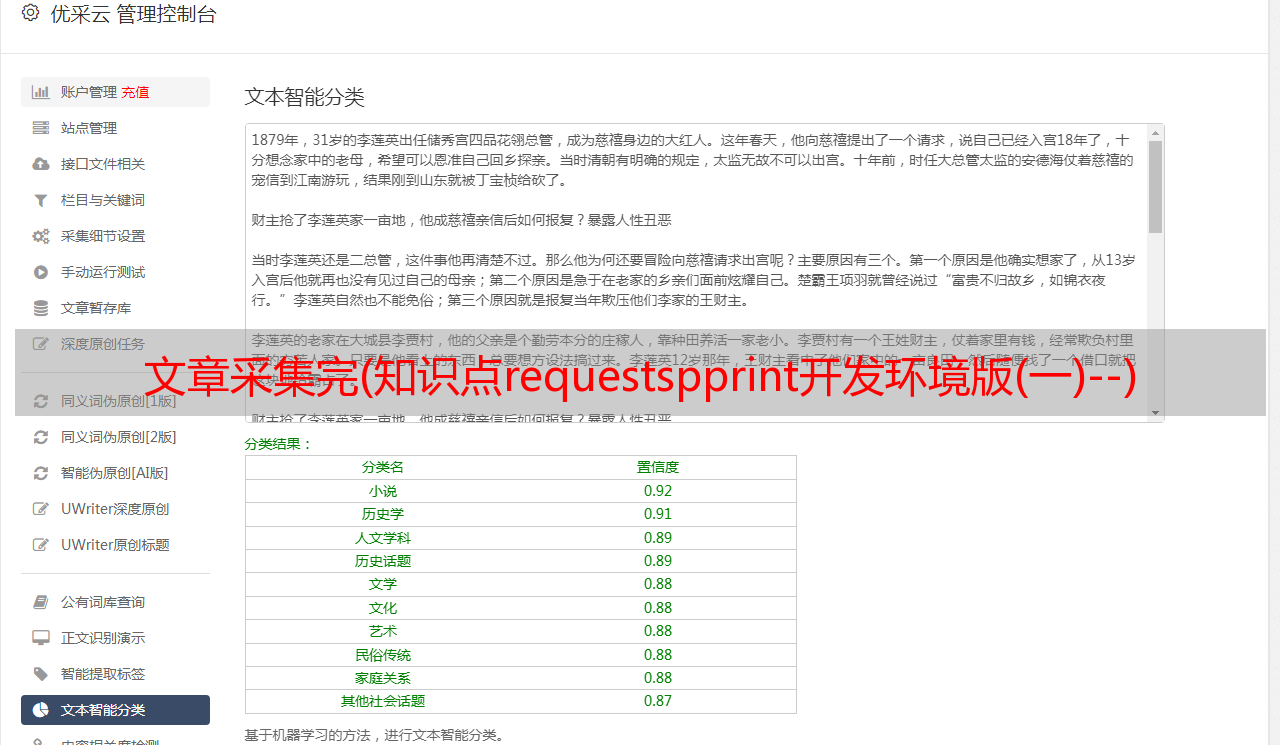

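This article shows how to use a Python crawler to collect video data from Weibo. It is intended as a practical reference for anyone interested, and the steps below walk through the whole process.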

Key points

requests

pprint

Development environment

Version: Python 3.8

Editor: PyCharm 2021.2

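How crawling works

Purpose: collect data from the internet in bulk (text, images, audio, video)

Essence: a repeated cycle of requests and responses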

Case implementation

1. Import the required modules

import requests

import pprint

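2. Find the target URL

Open the browser's developer tools, select Fetch/XHR, pick the request whose response contains the data, and note that request's URL.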
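Once the request has been located in the Fetch/XHR tab, it can help to split the copied URL into its parts to see which piece identifies the channel. The following is a minimal sketch using only the standard library, applied to the URL this article uses in step 3. The helper name `describe_endpoint` is made up for illustration.

```python
from urllib.parse import urlsplit, parse_qs


def describe_endpoint(url: str) -> dict:
    """Split an XHR URL copied from the developer tools into its parts."""
    parts = urlsplit(url)
    query = parse_qs(parts.query)  # each key maps to a list of values
    return {
        "host": parts.netloc,
        "path": parts.path,
        "page": query.get("page", [""])[0],
    }


info = describe_endpoint(
    "https://www.weibo.com/tv/api/component"
    "?page=/tv/channel/4379160563414111/editor"
)
print(info)
```

The `page` query parameter carries the channel path, which is the part that would change for a different channel.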

3. Send the network request

headers = {
    # copy these values from the request shown in the developer tools
    'cookie': '',
    'referer': 'https://weibo.com/tv/channel/4379160563414111/editor',
    'user-agent': '',
}
# form data: a JSON string naming the component and the channel id
data = {
    'data': '{"Component_Channel_Editor":{"cid":"4379160563414111","count":9}}'
}
url = 'https://www.weibo.com/tv/api/component?page=/tv/channel/4379160563414111/editor'
# POST the form and decode the JSON response
json_data = requests.post(url=url, headers=headers, data=data).json()
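The `data` field in the request above is a JSON string written by hand, which is easy to break when the channel id changes. As a hedged alternative, the same string can be generated with `json.dumps`. The function name `channel_payload` is an illustrative assumption, not part of the original code.

```python
import json


def channel_payload(cid: str, count: int = 9) -> dict:
    """Build the form data for the channel listing request."""
    inner = {"Component_Channel_Editor": {"cid": cid, "count": count}}
    # separators=(",", ":") drops the spaces json.dumps adds by default,
    # so the result matches the hand-written string character for character.
    return {"data": json.dumps(inner, separators=(",", ":"))}


payload = channel_payload("4379160563414111")
print(payload["data"])
```

The returned dict can be passed as `data=payload` to `requests.post` in the same way as the article's `data` variable.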

4. Get the data

# url_1 and data_1 are built per video from its oid (see the complete code)
json_data_2 = requests.post(url=url_1, headers=headers, data=data_1).json()
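Step 4 relies on `url_1` and `data_1`, which the complete code builds from each video's `oid`. The sketch below isolates that part so it can be tried without a network connection. The helper names and the sample response (including the `oid` value) are made up for illustration; only the key names come from the article.

```python
import json
from typing import Dict, Iterator, Tuple


def playinfo_request(oid: str) -> Tuple[str, Dict[str, str]]:
    """Return (url_1, data_1) for one video, as the complete code builds them."""
    url_1 = "https://weibo.com/tv/api/component?page=/tv/show/" + oid
    inner = {"Component_Play_Playinfo": {"oid": oid}}
    data_1 = {"data": json.dumps(inner, separators=(",", ":"))}
    return url_1, data_1


def iter_videos(json_data: dict) -> Iterator[Tuple[str, str]]:
    """Yield (oid, title) pairs from the channel listing response."""
    for ccs in json_data["data"]["Component_Channel_Editor"]["list"]:
        yield ccs["oid"], ccs["title"]


# Made-up response with the same shape the article indexes into.
sample = {"data": {"Component_Channel_Editor": {
    "list": [{"oid": "1034:1", "title": "demo"}],
    "next_cursor": 0,
}}}

for oid, title in iter_videos(sample):
    url_1, data_1 = playinfo_request(oid)
    print(title, url_1, data_1)
```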

5. Filter the data

dict_urls = json_data_2['data']['Component_Play_Playinfo']['urls']

video_url = "https:" + dict_urls[list(dict_urls.keys())[0]]

print(title + "\t" + video_url)
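The filtering step takes the first entry of the `urls` dict and prepends `https:`. A slightly more defensive version of the same idea might look like the sketch below. It assumes, based on the article's `"https:" +` prefix, that the values are scheme-less `//host/...` URLs. The labels and URLs in the sample are hypothetical.

```python
def pick_video_url(json_data_2: dict) -> str:
    """Return the first URL listed under Component_Play_Playinfo.urls."""
    dict_urls = json_data_2["data"]["Component_Play_Playinfo"]["urls"]
    if not dict_urls:
        raise ValueError("response contains no video URLs")
    # Dicts preserve insertion order, so this is the first entry the API listed.
    first = next(iter(dict_urls.values()))
    # Only add the scheme when the value is protocol-relative.
    return "https:" + first if first.startswith("//") else first


sample = {"data": {"Component_Play_Playinfo": {"urls": {
    "label-a": "//example.com/a.mp4",  # hypothetical labels and URLs
    "label-b": "//example.com/b.mp4",
}}}}
print(pick_video_url(sample))
```

Raising an error on an empty dict makes a missing video visible immediately instead of failing later with an `IndexError`.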

6. Save the data

video_data = requests.get(video_url).content
# the 'video' folder must already exist, otherwise open() fails
with open(f'video\\{title}.mp4', mode='wb') as f:
    f.write(video_data)
print(title, "downloaded successfully")
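The save step uses a Windows-style path and the raw title as the file name, so it fails if the `video` folder is missing or the title contains characters such as `/` or `?`. One possible way to handle both, sketched with the standard library only, is shown below. The helper names `safe_filename` and `save_video` are illustrative assumptions.

```python
import re
import tempfile
from pathlib import Path


def safe_filename(title: str, max_len: int = 80) -> str:
    """Replace characters that common file systems reject in file names."""
    cleaned = re.sub(r'[\\/:*?"<>|\r\n\t]', "_", title).strip(" .")
    return cleaned[:max_len] or "untitled"


def save_video(directory: str, title: str, video_data: bytes) -> Path:
    """Write the bytes to <directory>/<title>.mp4, creating the folder first."""
    folder = Path(directory)
    folder.mkdir(parents=True, exist_ok=True)
    target = folder / f"{safe_filename(title)}.mp4"
    target.write_bytes(video_data)
    return target


# Try it in a temporary folder with two placeholder bytes instead of a real video.
with tempfile.TemporaryDirectory() as tmp:
    path = save_video(tmp, 'clip 1/2: "demo"?', b"\x00\x01")
    saved_name, saved_size = path.name, path.stat().st_size
print(saved_name, saved_size)
```

In the crawler itself this would be called as `save_video("video", title, video_data)`. Using `pathlib` also avoids the hard-coded backslash, so the same code runs on Windows, macOS, and Linux.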

Complete code

import requests
import pprint

headers = {
    'cookie': 'add your own',
    'referer': 'https://weibo.com/tv/channel/4379160563414111/editor',
    'user-agent': '',
}
data = {
    'data': '{"Component_Channel_Editor":{"cid":"4379160563414111","count":9}}'
}
url = 'https://www.weibo.com/tv/api/component?page=/tv/channel/4379160563414111/editor'
json_data = requests.post(url=url, headers=headers, data=data).json()
# pretty-print the response to inspect its structure
pprint.pprint(json_data)
ccs_list = json_data['data']['Component_Channel_Editor']['list']
next_cursor = json_data['data']['Component_Channel_Editor']['next_cursor']

for ccs in ccs_list:
    oid = ccs['oid']
    title = ccs['title']
    # request the playback info for this video
    data_1 = {
        'data': '{"Component_Play_Playinfo":{"oid":"' + oid + '"}}'
    }
    url_1 = 'https://weibo.com/tv/api/component?page=/tv/show/' + oid
    json_data_2 = requests.post(url=url_1, headers=headers, data=data_1).json()
    # take the first listed URL and add the missing scheme
    dict_urls = json_data_2['data']['Component_Play_Playinfo']['urls']
    video_url = "https:" + dict_urls[list(dict_urls.keys())[0]]
    print(title + "\t" + video_url)
    # download the video and write it to disk (the 'video' folder must exist)
    video_data = requests.get(video_url).content
    with open(f'video\\{title}.mp4', mode='wb') as f:
        f.write(video_data)
    print(title, "downloaded successfully")
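The complete code reads `next_cursor` from the response but never uses it, so only the first page of videos is downloaded. The article does not show how the cursor is sent back to Weibo, so the sketch below leaves that to a caller-supplied function, `fetch_page`, which is hypothetical. It also assumes that a missing or falsy cursor means there are no more pages. The loop is demonstrated with made-up pages rather than real requests.

```python
from typing import Callable, Iterator, Optional


def iter_pages(fetch_page: Callable[[Optional[object]], dict],
               max_pages: int = 5) -> Iterator[dict]:
    """Yield video entries page by page until the cursor runs out."""
    cursor = None
    for _ in range(max_pages):  # hard cap so a bad cursor cannot loop forever
        json_data = fetch_page(cursor)
        editor = json_data["data"]["Component_Channel_Editor"]
        yield from editor["list"]
        cursor = editor.get("next_cursor")
        if not cursor:  # assumption: a falsy cursor means "no more pages"
            break


# Stand-in for the real request so the loop can be tried offline.
fake_pages = {
    None: {"data": {"Component_Channel_Editor": {
        "list": [{"oid": "a", "title": "first"}], "next_cursor": 2}}},
    2: {"data": {"Component_Channel_Editor": {
        "list": [{"oid": "b", "title": "second"}], "next_cursor": 0}}},
}
titles = [item["title"] for item in iter_pages(fake_pages.__getitem__)]
print(titles)
```

In a real run, `fetch_page` would wrap the `requests.post` call from the complete code and place the cursor wherever the request seen in the developer tools carries it.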

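Thank you for reading. I hope this walkthrough of collecting Weibo video data with a Python crawler was helpful. Please continue to support 易速云 and follow its industry news channel for more on related topics.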