Web crawler for scraping Baidu images (images appearing in answers to all questions under the Zhihu topic 『美女』, "Beauties")

优采云 Published: 2021-11-16 07:05

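#Update

Disclaimer: all text, images, and related external links that directly or indirectly, explicitly or implicitly relate to gender or facial attractiveness scores are produced by the face detection API. They carry no objectivity and are for reference only.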

1 Data source

Images appearing in the answers to all questions under the Zhihu topic 『美女』

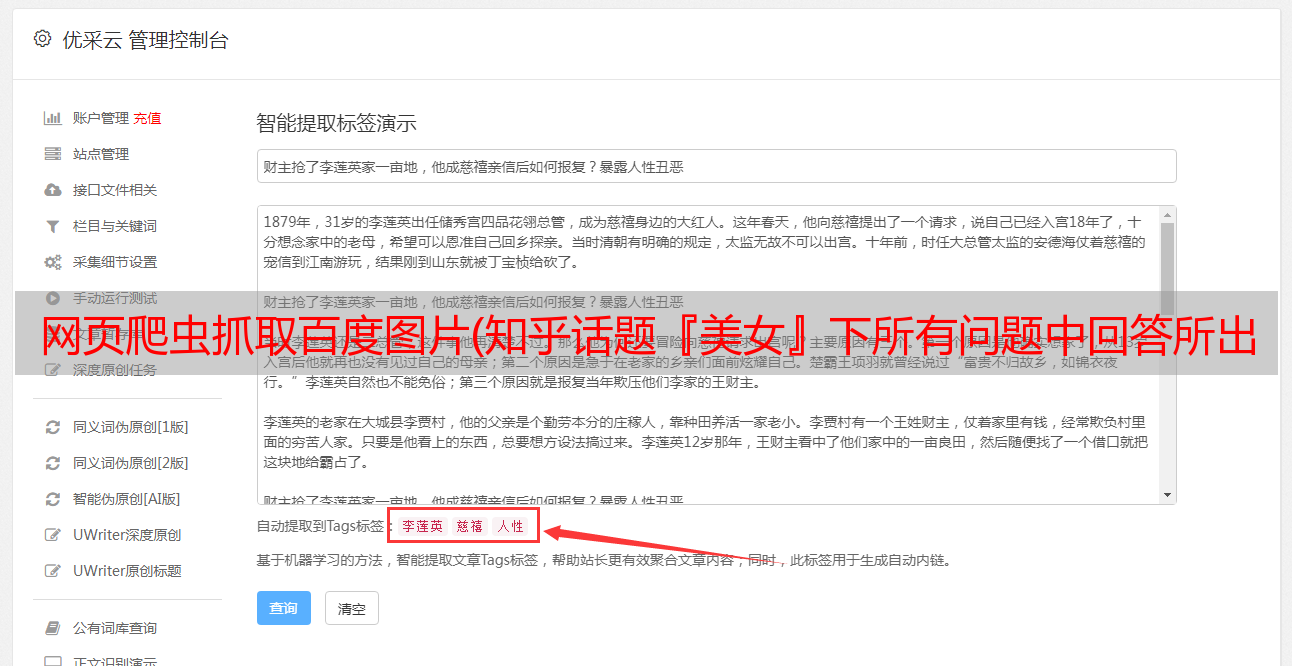

2 Crawler

Python 3, using the third-party libraries Requests, lxml, and AipFace; a little over 100 lines of code in total

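3 Required environment

Mac / Linux / Windows. Linux has not been tested but should work in theory. Windows users previously reported an exception; it turned out that Windows restricts which characters are allowed in local file names, so a regex filter is now applied. No Zhihu login is needed (you do not have to provide a Zhihu account or password). The face detection service requires a Baidu Cloud account (the same account used for Baidu Netdisk / Tieba).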
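The Windows file-name restriction mentioned above is handled with a regex filter. Here is a minimal sketch that uses the same pattern as the code in section 8; the helper name `sanitize_filename` and the sample title are made up for illustration.

```python
import re


def sanitize_filename(name):
    """Replace every character that is not a word character, '-' or '.' with '_'."""
    # In Python 3, \w is Unicode-aware for str patterns, so Chinese
    # characters in question titles and author names are preserved.
    return re.sub(r"[^-\w.]", "_", str(name).strip())


# Hypothetical file name built the same way as in the script:
# score--author--question title--sequence number
print(sanitize_filename('88--someone--what? is: "this"--0.jpg'))
```

Characters such as `?`, `:`, `"` and spaces become underscores, which keeps the name legal on Windows as well as on Mac and Linux.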

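4 Face detection library

AipFace, provided by the Baidu Cloud AI open platform, is a Python SDK for face detection. The service can also be called directly over HTTP, and it is free to use.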

5 Detection filter criteria
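The code in section 8 keeps a detected face only when three checks pass: face confidence of at least 0.6, a beauty score at or above the threshold, and gender reported as female. Below is a minimal sketch of that predicate, applied to dicts shaped like the `face_list` entries the script reads. The function name `passes_filter`, the constant `MIN_FACE_PROBABILITY`, and the sample values are illustrative assumptions.

```python
BEAUTY_THRESHOLD = 45        # same default as in the script's configuration
MIN_FACE_PROBABILITY = 0.6   # confidence cutoff used in the script


def passes_filter(face):
    """Return True if a detected face meets all three criteria."""
    return (
        face["face_probability"] >= MIN_FACE_PROBABILITY
        and face["beauty"] >= BEAUTY_THRESHOLD
        and face["gender"]["type"] == "female"
    )


# Made-up sample entries, only to exercise the predicate
sample_faces = [
    {"face_probability": 0.99, "beauty": 72.5, "gender": {"type": "female"}},
    {"face_probability": 0.40, "beauty": 90.0, "gender": {"type": "female"}},
    {"face_probability": 0.95, "beauty": 30.0, "gender": {"type": "female"}},
    {"face_probability": 0.95, "beauty": 80.0, "gender": {"type": "male"}},
]
kept_scores = [f["beauty"] for f in sample_faces if passes_filter(f)]
print(kept_scores)
```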

6 Implementation logic
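Judging from the code in section 8, the overall flow is: request one page of the topic feed, process every answer on it, then follow `paging.next` until `paging.is_end` is true. Here is a minimal offline sketch of that loop. The names `crawl`, `fetch_page`, `handle_page`, and the fake pages are hypothetical stand-ins, not part of the original script.

```python
def crawl(start_url, fetch_page, handle_page):
    """Follow paging links until the feed reports is_end; return visited URLs."""
    url = start_url
    visited = []
    while url is not None:
        page = fetch_page(url)      # in the real script: an HTTP GET returning JSON
        handle_page(page)           # in the real script: download and score images
        visited.append(url)
        paging = page["paging"]
        url = None if paging["is_end"] else paging["next"]
    return visited


# Fake two-page feed so the loop can run without network access
FAKE_PAGES = {
    "page-1": {"data": ["a", "b"], "paging": {"is_end": False, "next": "page-2"}},
    "page-2": {"data": ["c"], "paging": {"is_end": True, "next": "page-3"}},
}
seen = []
order = crawl("page-1", FAKE_PAGES.__getitem__, lambda page: seen.extend(page["data"]))
print(order, seen)
```

The real script also sleeps between pages and between face detection calls, which this sketch leaves out.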

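7 Scrape results

Images are saved directly to a folder (Angelababy makes a strong appearance). Among the images scraped so far, apart from Baby, the highest score is 88. Personally I do not agree with the ranking: my wife did not get the top score.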

8 Code

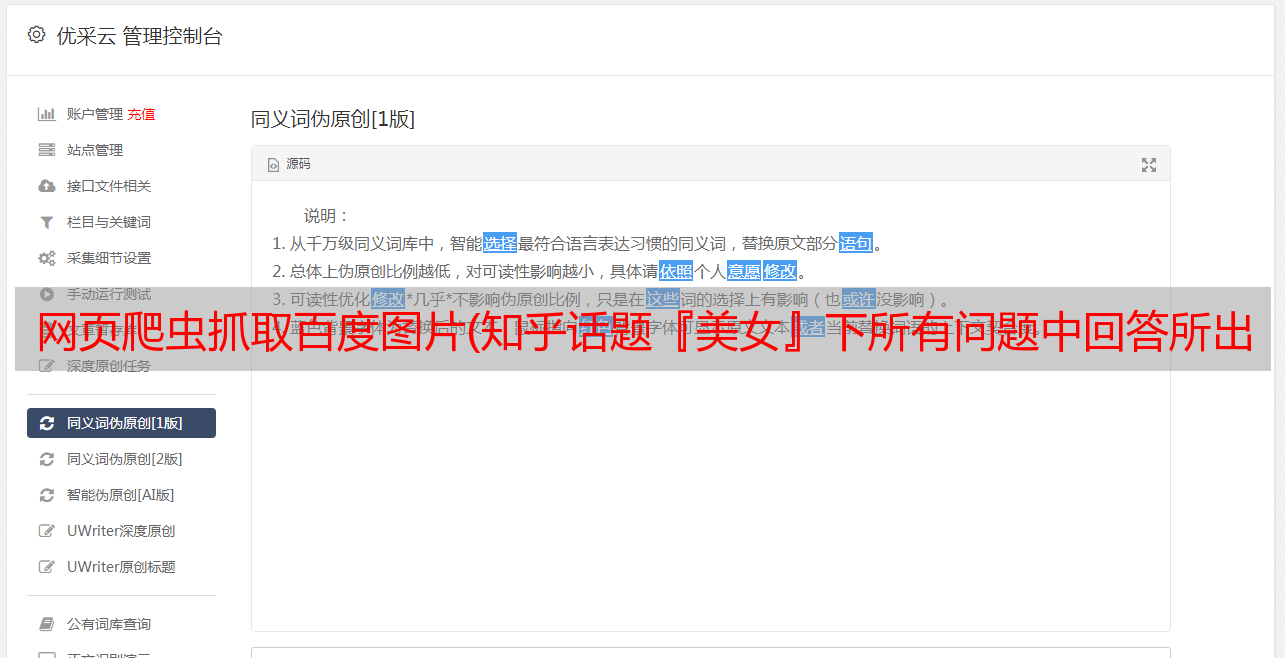

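8.1 Using the Baidu Cloud Python SDK directly (code removed)

8.2 Without the SDK, constructing the HTTP requests directly. The benefit of this version is that it does not depend on the SDK version. Baidu Cloud currently has two versions of the API, V2 and V3, and supports both for now, so using the SDK directly works fine. Once Baidu drops V2, you will have to either upgrade the SDK or use this version that builds the HTTP requests itself.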

# coding: utf-8
import time
import os
import re
import requests
from lxml import etree
# Only needed for the SDK variant (8.1); this version calls the HTTP API directly
# from aip import AipFace

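# Baidu Cloud face detection credentials
# These three lines are the only values you must fill in
APP_ID = "xxxxxxxx"
API_KEY = "xxxxxxxxxxxxxxxxxxxxxxxx"
SECRET_KEY = "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx"

# Output directory name, relative to the current directory
DIR = "image"

# Beauty-score threshold for keeping an image; lower it freely if you have plenty of disk space
BEAUTY_THRESHOLD = 45

# Open Zhihu in a browser and copy this value from the developer tools; no login required
# How to replace this value is described later in the article
AUTHORIZATION = "oauth c3cef7c66a1843f8b3a9e6a1e3160e20"

# Nothing below needs to be changed

# Number of feed items per Zhihu request; do not set it too high, be polite
LIMIT = 5

# ID of the topic 『美女』, which is the parent topic of 『颜值』 (20013528)
SOURCE = "19552207"

# Make the crawler look like a normal browser request
USER_AGENT = "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/534.55.3 (KHTML, like Gecko) Version/5.1.5 Safari/534.55.3"

# Make the crawler look like a normal browser request
REFERER = "https://www.zhihu.com/topic/%s/newest" % SOURCE

# Request URL for the feed of a given topic
BASE_URL = "https://www.zhihu.com/api/v4/topics/%s/feeds/timeline_activity"

# Query parameters attached to the initial request URL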

URL_QUERY = "?include=data%5B%3F%28target.type%3Dtopic_sticky_module%29%5D.target.data%5B%3F%28target.type%3Danswer%29%5D.target.content%2Crelationship.is_authorized%2Cis_author%2Cvoting%2Cis_thanked%2Cis_nothelp%3Bdata%5B%3F%28target.type%3Dtopic_sticky_module%29%5D.target.data%5B%3F%28target.type%3Danswer%29%5D.target.is_normal%2Ccomment_count%2Cvoteup_count%2Ccontent%2Crelevant_info%2Cexcerpt.author.badge%5B%3F%28type%3Dbest_answerer%29%5D.topics%3Bdata%5B%3F%28target.type%3Dtopic_sticky_module%29%5D.target.data%5B%3F%28target.type%3Darticle%29%5D.target.content%2Cvoteup_count%2Ccomment_count%2Cvoting%2Cauthor.badge%5B%3F%28type%3Dbest_answerer%29%5D.topics%3Bdata%5B%3F%28target.type%3Dtopic_sticky_module%29%5D.target.data%5B%3F%28target.type%3Dpeople%29%5D.target.answer_count%2Carticles_count%2Cgender%2Cfollower_count%2Cis_followed%2Cis_following%2Cbadge%5B%3F%28type%3Dbest_answerer%29%5D.topics%3Bdata%5B%3F%28target.type%3Danswer%29%5D.target.content%2Crelationship.is_authorized%2Cis_author%2Cvoting%2Cis_thanked%2Cis_nothelp%3Bdata%5B%3F%28target.type%3Danswer%29%5D.target.author.badge%5B%3F%28type%3Dbest_answerer%29%5D.topics%3Bdata%5B%3F%28target.type%3Darticle%29%5D.target.content%2Cauthor.badge%5B%3F%28type%3Dbest_answerer%29%5D.topics%3Bdata%5B%3F%28target.type%3Dquestion%29%5D.target.comment_count&limit=" + str(LIMIT)

# Fetch the raw content (an image) at the given URL
def fetch_image(url):
    try:
        headers = {
            "User-Agent": USER_AGENT,
            "Referer": REFERER,
            "authorization": AUTHORIZATION
        }
        s = requests.get(url, headers=headers)
    except Exception:
        print("fetch image fail. " + url)
        raise
    return s.content

# Fetch the JSON response (the topic feed) at the given URL
def fetch_activities(url):
    try:
        headers = {
            "User-Agent": USER_AGENT,
            "Referer": REFERER,
            "authorization": AUTHORIZATION
        }
        s = requests.get(url, headers=headers)
    except Exception:
        print("fetch last activities fail. " + url)
        raise
    return s.json()

# Process one page of the returned topic feed
def process_activities(datums, face_detective):
    for data in datums["data"]:
        target = data["target"]
        if "content" not in target or "question" not in target or "author" not in target:
            continue

        # Parse the HTML content of each feed item
        html = etree.HTML(target["content"])
        seq = 0

        #question_url = target["question"]["url"]
        question_title = target["question"]["title"]
        author_name = target["author"]["name"]
        #author_id = target["author"]["url_token"]
        print("current answer: " + question_title + " author: " + author_name)

        # Collect all image URLs in the answer
        images = html.xpath("//img/@src")
        for image in images:
            if not image.startswith("http"):
                continue
            s = fetch_image(image)

            # Call the face detection service
            scores = face_detective(s)
            for score in scores:
                filename = ("%d--" % score) + author_name + "--" + question_title + ("--%d" % seq) + ".jpg"
                # Illegal file-name characters differ by platform; this is only a simple
                # filter, mainly for the contents of author_name / question_title
                filename = re.sub(r'(?u)[^-\w.]', '_', filename)
                seq = seq + 1
                with open(os.path.join(DIR, filename), "wb") as fd:
                    fd.write(s)

            # Face detection is free but has a QPS limit
            time.sleep(2)

    if not datums["paging"]["is_end"]:
        # Request URL for the next page of the feed
        return datums["paging"]["next"]
    else:
        return None

def get_valid_filename(s):
    s = str(s).strip().replace(' ', '_')
    return re.sub(r'(?u)[^-\w.]', '_', s)

import base64

def detect_face(image, token):
    # Baidu Cloud V3 face detection endpoint
    URL = "https://aip.baidubce.com/rest/2.0/face/v3/detect"
    try:
        params = {
            "access_token": token
        }
        data = {
            "face_field": "age,gender,beauty,qualities",
            "image_type": "BASE64",
            # b64encode returns bytes; decode so the form field is plain text
            "image": base64.b64encode(image).decode("ascii")
        }
        s = requests.post(URL, params=params, data=data)
        return s.json()["result"]
    except Exception:
        print("detect face fail. " + URL)
        raise

def fetch_auth_token(api_key, secret_key):
    # Baidu Cloud OAuth endpoint that issues the access token
    URL = "https://aip.baidubce.com/oauth/2.0/token"
    try:
        params = {
            "grant_type": "client_credentials",
            "client_id": api_key,
            "client_secret": secret_key
        }
        s = requests.post(URL, params=params)
        return s.json()["access_token"]
    except Exception:
        print("fetch baidu auth token fail. " + URL)
        raise

def init_face_detective(app_id, api_key, secret_key):
    # client = AipFace(app_id, api_key, secret_key)
    # The Baidu Cloud V3 API requires an access token to be fetched first
    token = fetch_auth_token(api_key, secret_key)

    def detective(image):
        #r = client.detect(image, options)
        # Call the HTTP API directly
        r = detect_face(image, token)

        # No face detected
        if r is None or r["face_num"] == 0:
            return []

        scores = []
        for face in r["face_list"]:
            # Face confidence too low
            if face["face_probability"] < 0.6:
                continue
            # Beauty score below the threshold
            if face["beauty"] < BEAUTY_THRESHOLD:
                continue
            # Gender is not female
            if face["gender"]["type"] != "female":
                continue
            scores.append(face["beauty"])
        return scores

    return detective

def init_env():
    if not os.path.exists(DIR):
        os.makedirs(DIR)

init_env()
face_detective = init_face_detective(APP_ID, API_KEY, SECRET_KEY)

url = BASE_URL % SOURCE + URL_QUERY
while url is not None:
    print("current url: " + url)
    datums = fetch_activities(url)
    url = process_activities(datums, face_detective)
    # Be polite: do not shorten the crawler's pause between requests
    time.sleep(5)

# vim: set ts=4 sw=4 sts=4 tw=100 et:

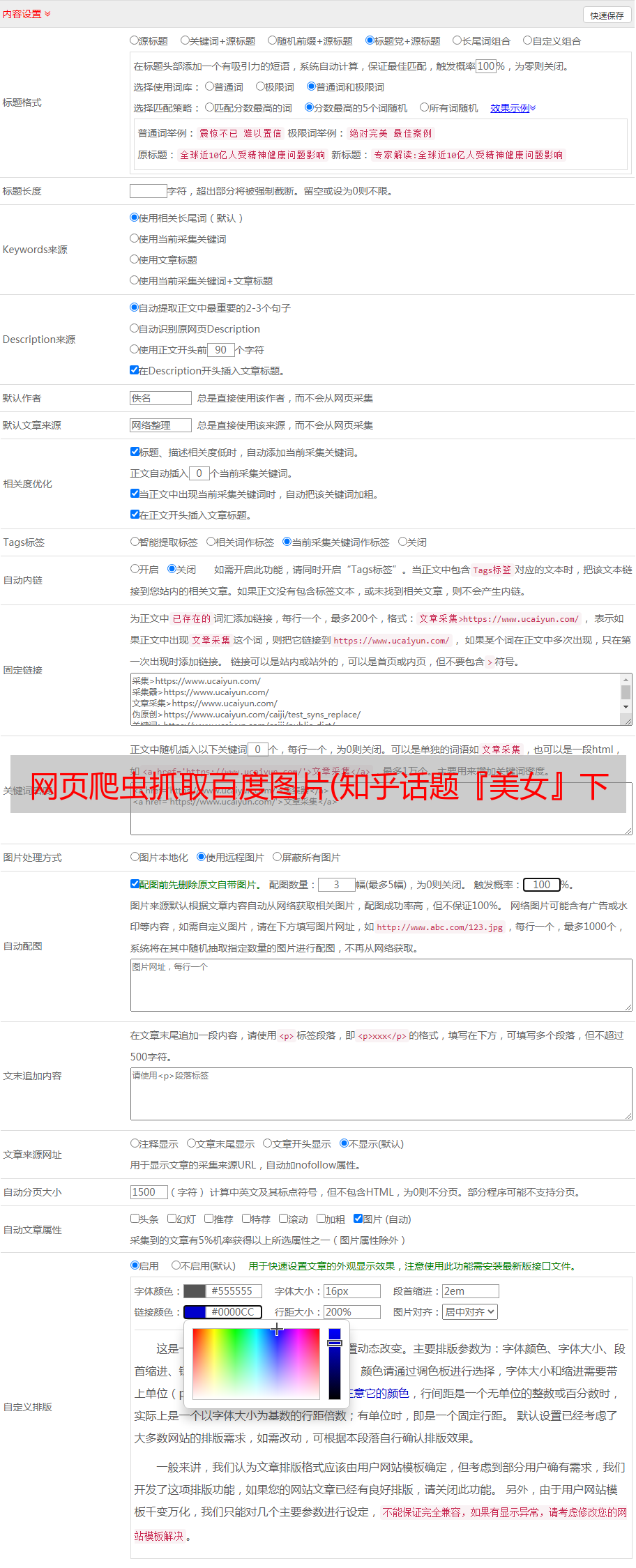

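9 Setup steps

Login is required; an existing Baidu account (the same one used for Tieba / Netdisk) works directly, so there is no need to register.

Fill in the form however you like. At this stage both V3 and V2 are selected by default; if they are not, select them all.

Fill in the AppID, API Key, and Secret Key in the code.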

{
    "error": {
        "message": "ZERR_NO_AUTH_TOKEN",
        "code": 100,
        "name": "AuthenticationInvalidRequest"
    }
}

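To get a value for AUTHORIZATION: in Chrome, open any Zhihu link, open the developer tools, and look at the HTTP request headers. No login is required.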
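The JSON error shown above carries the name `AuthenticationInvalidRequest` and the message `ZERR_NO_AUTH_TOKEN`, which suggests it is what the Zhihu API returns when the `authorization` header is missing or no longer accepted. That reading is an inference from the message, not something the article states. Under that assumption, here is a small sketch of a check the crawler could run on each JSON response before processing it; `is_auth_error` is a hypothetical helper, not part of the original script.

```python
def is_auth_error(payload):
    """Return True if a decoded JSON response looks like the auth error above."""
    error = payload.get("error") if isinstance(payload, dict) else None
    return isinstance(error, dict) and error.get("name") == "AuthenticationInvalidRequest"


# The error body shown above, and a made-up normal feed page for contrast
error_response = {
    "error": {
        "message": "ZERR_NO_AUTH_TOKEN",
        "code": 100,
        "name": "AuthenticationInvalidRequest",
    }
}
normal_response = {"data": [], "paging": {"is_end": True}}

print(is_auth_error(error_response), is_auth_error(normal_response))
```

If the check returns true, replacing the AUTHORIZATION value with a fresh one copied from the browser is the likely fix.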

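10 Conclusion

You are welcome to follow my columns:

Salary-Oriented Programming: articles on Java interview questions

Programming fundamentals and some theory

Everyday fun with programming: doing interesting things with Python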