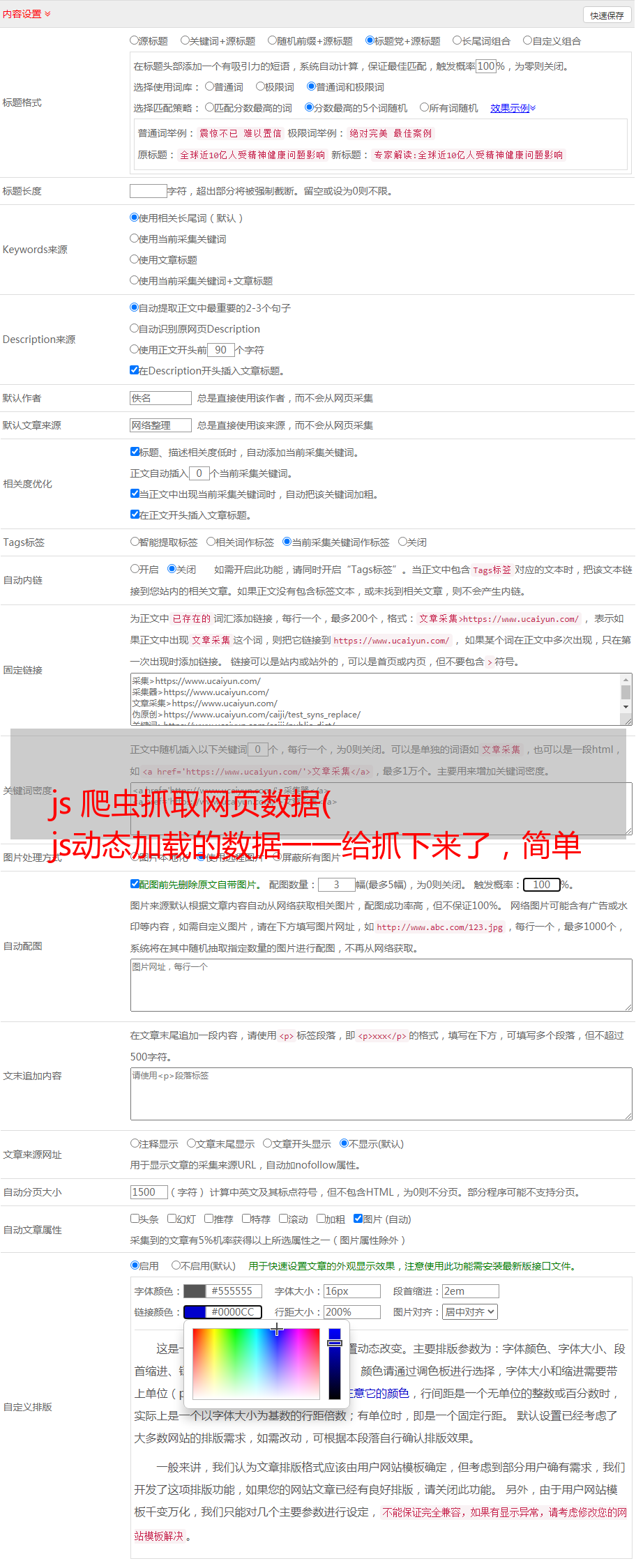

Scraping web page data with a JS crawler (scraping all the JS-loaded data after a crude direct scrape failed)

优采云 · Published: 2021-10-17 20:05

How to Get Past a Login and Scrape JS-Loaded Web Page Data [Python]

Date: 2018-11-24 ┊ Views: 2,670 ┊ Tags: development, programming, experience

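I spent most of today tinkering, and I managed to scrape both data from a site that requires a login and data loaded dynamically by JavaScript.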

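First, logging in sets a cookie. We need to save that cookie and add it as a header when building the request with urllib2. I also added one more header: a fake browser User-Agent, so the server thinks the request comes from an ordinary browser. This gets around simple anti-scraping measures.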
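The script at the end of this post is Python 2 and uses urllib2. In Python 3, the same classes live in urllib.request. Below is a minimal Python 3 sketch of the header trick. It assumes the cookie string was copied from the browser after logging in, and the URL and cookie in the usage lines are placeholders. The second helper is an alternative the post does not use: an http.cookiejar.CookieJar that stores cookies set by earlier responses, such as a login request, and sends them back automatically.

```python
import http.cookiejar
import urllib.request

# Browser User-Agent string copied from the post's script.
USER_AGENT = ("Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_1) AppleWebKit/537.36 "
              "(KHTML, like Gecko) Chrome/70.0.3538.110 Safari/537.36")


def build_request(url, cookie):
    """Build a GET request carrying the saved login cookie and a browser User-Agent."""
    headers = {"User-Agent": USER_AGENT, "Cookie": cookie}
    return urllib.request.Request(url, headers=headers)


def build_cookie_opener():
    """Alternative: an opener whose CookieJar keeps cookies set by earlier responses."""
    jar = http.cookiejar.CookieJar()
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))
    # Replace the default "Python-urllib/3.x" agent with the browser string.
    opener.addheaders = [("User-Agent", USER_AGENT)]
    return opener, jar


if __name__ == "__main__":
    # Placeholder URL and cookie; nothing is sent until urlopen() is called.
    req = build_request("https://example.com/data?id=1", "JSESSIONID=placeholder")
    print(req.get_header("User-agent"))
```

urllib.request normalizes header names with str.capitalize(), which is why the lookup uses "User-agent".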

After logging in with the cookies, I found that the page content was loaded dynamically by JavaScript, so the scrape failed!

After some searching, I found two approaches:

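1. Use a tool: build a webdriver that opens the page in Chrome or Firefox. The drawback is that this is far too slow.
2. Analyze how the page loads and, from the responses, find the API or service address that the page calls while loading. This takes more work.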

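After going through dozens of requests, I finally found the address of the backend service that loads the data. I worked out its URL pattern and then spliced in the item id to build the full address for each request.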
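Here is a Python 3 sketch of that last step, using the service URL and query parameters from the script. Only the base URL and the three parameters come from the post. The helper name `build_item_url` and the second id in the usage lines are hypothetical. The sketch uses urlencode() instead of plain string concatenation so that any special characters in an id are escaped.

```python
from urllib.parse import urlencode

# Service endpoint found by watching the page's network requests (taken from the post's script).
SERVICE_URL = ("https://api.amkevin.com:8888/ccm/service/"
               "com.amkevin.team.workitem.common.internal.rest.IWorkItemRestService/workItemDT88")
PROJECT_AREA = "_hnbI8sMnEeSExMMeBFetbg"


def build_item_url(item_id):
    """Splice a work item id into the same query string the page itself uses."""
    params = {
        "includeHistory": "false",
        "id": item_id,
        "projectAreaItemId": PROJECT_AREA,
    }
    # dicts keep insertion order, so the parameters appear in the order above
    return SERVICE_URL + "?" + urlencode(params)


if __name__ == "__main__":
    # Hypothetical ids: one request per id can then be built from these URLs.
    for item_id in ["99999", "100000"]:
        print(build_item_url(item_id))
```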

To my surprise, the server returned XML rather than JSON. I quickly looked into ways of parsing XML and chose minidom, which felt comfortable to use.
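Below is a minimal Python 3 sketch of minidom parsing. The sample XML is invented: it only mirrors the element and attribute names the script looks for (items, xsi:type, content), because the post does not show a real response. Unlike the script, the sketch parses the response bytes directly with parseString() instead of saving them to a file first. Note that the xsi prefix is declared in the sample, as any well-formed namespaced document must do.

```python
import xml.dom.minidom

# Invented sample that mimics the tag names used in the post's script.
SAMPLE = b"""<response xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance">
  <items xsi:type="workitem.restDTO:CommentDTO">
    <content>First comment</content>
  </items>
  <items xsi:type="workitem.restDTO:OtherDTO">
    <content>Not a comment</content>
  </items>
</response>"""


def comment_texts(xml_bytes):
    """Return the <content> text of each <items> element typed as a CommentDTO."""
    dom = xml.dom.minidom.parseString(xml_bytes)
    texts = []
    for item in dom.documentElement.getElementsByTagName("items"):
        # getAttribute() returns "" when the attribute is missing
        if item.getAttribute("xsi:type") == "workitem.restDTO:CommentDTO":
            content = item.getElementsByTagName("content")[0]
            texts.append(content.firstChild.data)
    return texts


if __name__ == "__main__":
    print(comment_texts(SAMPLE))  # ['First comment']
```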

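Then I ran into empty tags. When a page has no comments, the tag is empty, and accessing the list directly (childNodes[0]) raises an "index out of range" error. My crude fix: just try it and skip the item if it fails.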
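Catching the error works, but it drops the whole comment whenever any one field is empty. An alternative sketch, which is not the post's approach, is a hypothetical helper, `text_of`. It returns a default value when a tag is missing or empty, so the remaining fields can still be recorded.

```python
import xml.dom.minidom


def text_of(parent, tag, default=""):
    """Text of the first <tag> under parent, or `default` if the tag is missing or empty."""
    nodes = parent.getElementsByTagName(tag)
    if not nodes:
        return default
    # Join text and CDATA children; an empty tag has none, which yields "".
    parts = [child.data for child in nodes[0].childNodes
             if child.nodeType in (child.TEXT_NODE, child.CDATA_SECTION_NODE)]
    return "".join(parts) or default


if __name__ == "__main__":
    doc = xml.dom.minidom.parseString(
        b"<items><content/><creationDate>2018-11-24</creationDate></items>")
    item = doc.documentElement
    print(repr(text_of(item, "content")))       # ''
    print(repr(text_of(item, "creationDate")))  # '2018-11-24'
```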

The scraped data is written to a CSV file, and downloaded attachments are saved in a small folder named after the item id.
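Here is a Python 3 sketch of the output step. The column names come from the post's script, but the function names are hypothetical. The sketch uses os.makedirs(..., exist_ok=True) in place of the script's exists()/mkdir() check. In Python 3, CSV files should be opened with newline="" and an explicit encoding.

```python
import csv
import os

CSV_HEADER = ["ID", "Creator", "Creator UserID", "Comment Content", "Creation Date"]


def append_comment(csv_path, row):
    """Append one comment row, writing the header first if the file does not exist yet."""
    is_new = not os.path.exists(csv_path)
    with open(csv_path, "a", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        if is_new:
            writer.writerow(CSV_HEADER)
        writer.writerow(row)


def save_attachment(base_dir, item_id, file_name, data):
    """Write attachment bytes to <base_dir>/<item_id>/<file_name>, creating the folder if needed."""
    folder = os.path.join(base_dir, item_id)
    os.makedirs(folder, exist_ok=True)
    # basename() drops any directory part of a server-supplied name
    path = os.path.join(folder, os.path.basename(file_name))
    with open(path, "wb") as f:
        f.write(data)
    return path
```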

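For log output, timestamps are formatted as long strings that include milliseconds, matching the messages printed by the robot testing tool.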
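The script's get_timestamp() builds this string by hand. Below is a Python 3 sketch of the same format using datetime, where the microsecond field divided by 1000 gives the milliseconds. The helper names `format_timestamp` and `log_info` are hypothetical; log_info mirrors the script's "<time> : INFO : <message>" lines.

```python
from datetime import datetime


def format_timestamp(now=None):
    """Format a time as 'YYYYmmdd HH:MM:SS.mmm' (milliseconds, zero-padded)."""
    if now is None:
        now = datetime.now()
    return "%s.%03d" % (now.strftime("%Y%m%d %H:%M:%S"), now.microsecond // 1000)


def log_info(message):
    """Print a log line in the same shape as the post's script."""
    print(format_timestamp() + " : INFO : " + message)


if __name__ == "__main__":
    print(format_timestamp(datetime(2018, 11, 24, 20, 5, 7, 123456)))  # 20181124 20:05:07.123
    log_info("write comments to csv success.")
```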

Finally, the temporary XML file created along the way is deleted. Haha.
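In the script, the temporary file is removed only at the very end, so it is left behind if a request or parse fails halfway. One way to guarantee the cleanup, assuming you still want a file on disk, is to write to a tempfile.mkstemp() path and delete it in a finally block. (Calling parseString() on the response bytes avoids the file altogether.) The function name below is hypothetical.

```python
import os
import tempfile
import xml.dom.minidom


def parse_via_temp_file(xml_bytes):
    """Save the response to a temporary .xml file, parse it, and always delete the file."""
    fd, path = tempfile.mkstemp(suffix=".xml")
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(xml_bytes)
        return xml.dom.minidom.parse(path)
    finally:
        # runs whether parsing succeeded or raised
        os.remove(path)
```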

Code:

    #!/usr/bin/python
    # -*- coding:utf-8 -*-
    import urllib2
    import xml.dom.minidom
    import os
    import csv
    import time

    def get_timestamp():
        """Return the local time as 'YYYYmmdd HH:MM:SS.mmm' (with milliseconds)."""
        ct = time.time()
        local_time = time.localtime(ct)
        time_head = time.strftime("%Y%m%d %H:%M:%S", local_time)
        time_secs = (ct - long(ct)) * 1000
        # "%03d" zero-pads the millisecond part, e.g. 20181124 20:05:07.045
        timestamp = "%s.%03d" % (time_head, time_secs)
        return timestamp

    # create request user-agent header
    userAgent = 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.110 Safari/537.36'
    cookie = '...0000Y3Pwq2s9BdZn8e0zpTDmkVv:-1...'
    uaHeaders = {'User-Agent': userAgent, 'Cookie': cookie}

    # item url string connection
    itemUrlHead = "https://api.amkevin.com:8888/ccm/service/com.amkevin.team.workitem.common.internal.rest.IWorkItemRestService/workItemDT88?includeHistory=false&id="
    itemUrlId = "99999"
    itemUrlTail = "&projectAreaItemId=_hnbI8sMnEeSExMMeBFetbg"
    itemUrl = itemUrlHead + itemUrlId + itemUrlTail

    # send request with user-agent header
    request = urllib2.Request(itemUrl, headers=uaHeaders)
    response = urllib2.urlopen(request)
    xmlResult = response.read()

    # save the XML response to a temporary file named after the item id
    curPath = os.getcwd()
    curXml = os.path.join(curPath, itemUrlId + '.xml')
    if os.path.exists(curXml):
        os.remove(curXml)
    curAttObj = open(curXml, 'wb')
    curAttObj.write(xmlResult)
    curAttObj.close()

    # parse response xml file
    DOMTree = xml.dom.minidom.parse(curXml)
    xmlDom = DOMTree.documentElement

    # prepare the shared comment csv; the header is written only when the file is first created
    csvHeader = ["ID", "Creator", "Creator UserID", "Comment Content", "Creation Date"]
    csvRow = []
    csvCmtOneFile = os.path.join(curPath, 'rtcComment.csv')
    if not os.path.exists(csvCmtOneFile):
        # Python 2's csv module expects files opened in binary mode
        csvObj = open(csvCmtOneFile, 'wb')
        csvWriter = csv.writer(csvObj)
        csvWriter.writerow(csvHeader)
        csvObj.close()

    # get comments & write to csv file
    items = xmlDom.getElementsByTagName("items")
    for item in items:
        try:
            if item.hasAttribute("xsi:type"):
                curItem = item.getAttribute("xsi:type")
                if curItem == "workitem.restDTO:CommentDTO":
                    curCommentContent = item.getElementsByTagName("content")[0].childNodes[0].data
                    # The arguments of these two replace() calls were lost when the post was
                    # extracted; as written, replace('', '') returns the string unchanged.
                    curCommentContent = curCommentContent.replace('', '')
                    curCommentContent = curCommentContent.replace('', '')
                    curCommentCreationDate = item.getElementsByTagName("creationDate")[0].childNodes[0].data
                    curCommentCreator = item.getElementsByTagName("creator")[0]
                    curCommentCreatorName = curCommentCreator.getElementsByTagName("name")[0].childNodes[0].data
                    curCommentCreatorId = curCommentCreator.getElementsByTagName("userId")[0].childNodes[0].data
                    # minidom returns unicode; Python 2's csv writer needs UTF-8 byte strings
                    csvRow = [field.encode('utf-8') for field in (
                        itemUrlId, curCommentCreatorName, curCommentCreatorId,
                        curCommentContent, curCommentCreationDate)]
                    # append the row to the shared comment csv
                    csvObj = open(csvCmtOneFile, 'ab')
                    csvWriter = csv.writer(csvObj)
                    csvWriter.writerow(csvRow)
                    csvObj.close()
                    # also keep a per-item copy in <id>/<id>.csv ('ab' creates the file if missing)
                    curAttFolder = os.path.join(curPath, itemUrlId)
                    if not os.path.exists(curAttFolder):
                        os.mkdir(curAttFolder)
                    curCsvFile = os.path.join(curAttFolder, itemUrlId + '.csv')
                    curCsvObj = open(curCsvFile, 'ab')
                    curCsvWriter = csv.writer(curCsvObj)
                    curCsvWriter.writerow(csvRow)
                    curCsvObj.close()
                    print get_timestamp() + " :" + " INFO :" + " write comments to csv success."
        except IndexError:
            # an empty tag has no child text node, so childNodes[0] raises IndexError
            print get_timestamp() + " :" + " INFO :" + " parse xml encountered empty element, skipped."

    # get attachments and download them into the <id> folder
    linkDTOs = xmlDom.getElementsByTagName("linkDTOs")
    for linkDTO in linkDTOs:
        try:
            curTarget = linkDTO.getElementsByTagName("target")[0]
            if curTarget.hasAttribute("xsi:type"):
                curAtt = curTarget.getAttribute("xsi:type")
                if curAtt == "workitem.restDTO:AttachmentDTO":
                    curAttUrl = linkDTO.getElementsByTagName("url")[0].childNodes[0].data
                    curAttName = curTarget.getElementsByTagName("name")[0].childNodes[0].data
                    # save the attachment file to local dir (check the folder itself, not a file in it)
                    curAttFolder = os.path.join(curPath, itemUrlId)
                    if not os.path.exists(curAttFolder):
                        os.mkdir(curAttFolder)
                    curAttFile = os.path.join(curAttFolder, curAttName)
                    curRequest = urllib2.Request(curAttUrl, headers=uaHeaders)
                    curResponse = urllib2.urlopen(curRequest)
                    curAttRes = curResponse.read()
                    # 'wb' overwrites any old copy and keeps binary attachments intact
                    curAttObj = open(curAttFile, 'wb')
                    curAttObj.write(curAttRes)
                    curAttObj.close()
                    print get_timestamp() + " :" + " INFO :" + " download attachment [" + curAttName + "] success."
        except IndexError:
            # a missing or empty tag makes one of the [0] lookups above fail
            print get_timestamp() + " :" + " INFO :" + " parse xml encountered empty element, skipped."

    # delete temporary xml file
    if os.path.exists(curXml):
        os.remove(curXml)